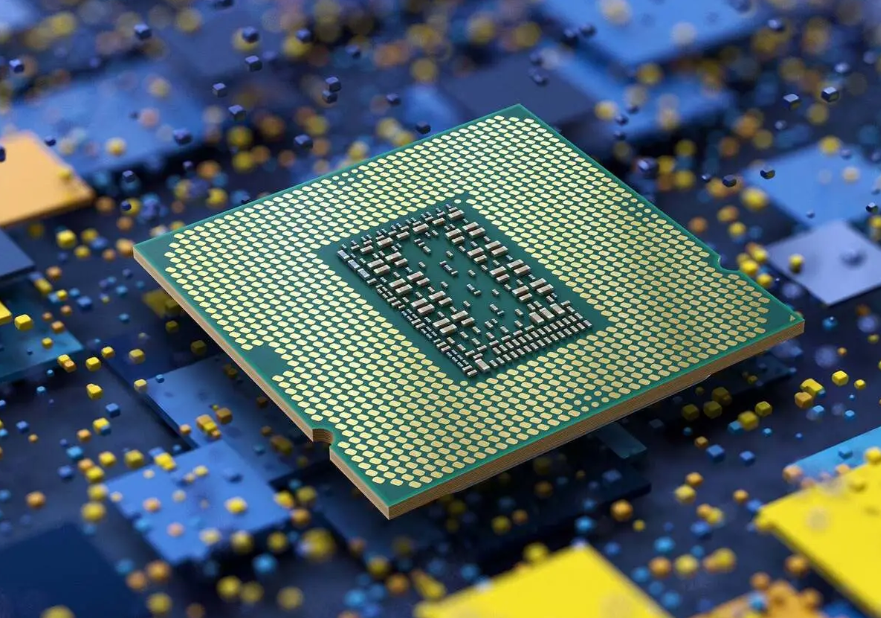

Some apps need CPU clock speed while others need multiple cores, so base your server purchases accordingly.

In reviewing CPU and server benchmarks, you’ve undoubtedly noticed that testing covers both single-core and multi-core performance. Here’s the difference.

In terms of raw performance, both are equally important, but single- and multi-core have areas of use where they shine. So when picking a CPU, it’s important to consider your particular workloads and evaluate whether single-core or multi-core best meets your needs.

Single-core CPUs

There are still a lot of applications out there that are single-core limited, such as many databases (although some, like MySQL, can use multiple cores).

Single-core performance depends on a couple of factors. Clock frequency is the big one; the higher the frequency, the faster apps will run. Also important is the width of the execution pipeline: the wider the pipeline, the more work gets done per clock cycle. So even if an app is single-threaded, a wider pipeline can improve its performance.
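As a rough illustration of how frequency and pipeline width combine, single-thread throughput can be approximated as clock speed times instructions completed per cycle (IPC). The sketch below is a simplified model, not a benchmark: the function name and both example chips are hypothetical, and real results also depend on memory stalls, caches, and the workload itself.

```python
def instructions_per_second(clock_ghz: float, ipc: float) -> float:
    """Rough single-thread throughput: cycles per second times instructions per cycle."""
    return clock_ghz * 1e9 * ipc

# Two hypothetical chips (illustrative numbers, not real products).
narrow_fast = instructions_per_second(clock_ghz=4.0, ipc=2.0)  # higher clock, narrower pipeline
wide_slow = instructions_per_second(clock_ghz=3.0, ipc=3.0)    # lower clock, wider pipeline

# In this simplified model, the wider chip wins despite its lower clock.
print(f"narrow/fast: {narrow_fast:.2e} instructions/s")
print(f"wide/slow:   {wide_slow:.2e} instructions/s")
```

Under these assumed numbers, the lower-clocked chip still comes out ahead, which is why clock frequency alone is not a reliable guide.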

Multi-core CPUs

Multi-core benchmarking often entails running multiple apps in parallel rather than bringing multiple cores to bear on a single application. Each app runs on a separate core without having to wait its turn as it would with a single core.
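The effect of running apps side by side can be sketched with simple arithmetic. The example below assumes every app is single-threaded, takes the same amount of time, and does not contend with the others for memory or I/O; the function name and the numbers are hypothetical.

```python
import math

def wall_time_seconds(n_apps: int, cores: int, seconds_per_app: float) -> float:
    """Time to finish n_apps identical single-threaded apps on a given number of cores.

    Apps run in rounds: each round runs at most one app per core.
    """
    rounds = math.ceil(n_apps / cores)
    return rounds * seconds_per_app

# Eight 10-second apps: one core runs them back to back, eight cores run them at once.
one_core = wall_time_seconds(n_apps=8, cores=1, seconds_per_app=10.0)
eight_cores = wall_time_seconds(n_apps=8, cores=8, seconds_per_app=10.0)
print(one_core, eight_cores)
```

A ninth app on the eight-core chip would need a second round, so the benefit depends on how the number of apps lines up with the number of cores.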

Many chips targeting cloud providers and large enterprises have 96 (AMD Epyc “Genoa”) to 128 (Ampere Altra Max) cores. The more users and virtual machines there are, the more cores are needed to handle the load.

Per-core pricing

These very large chips are typically used to run multi-tenant workloads, including containers and virtual machines, said Patrick Kennedy, president and editor of ServeTheHome, an independent testing site for SMB to enterprise server gear.

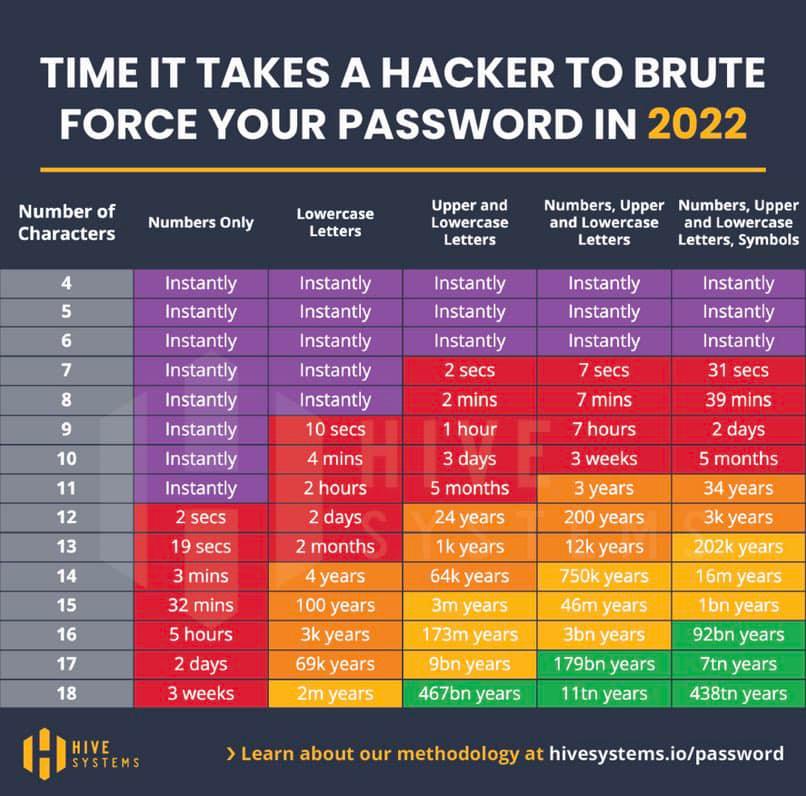

Because enterprise software is often licensed per core, enterprises should seek the highest performance per core in order to minimize license fees, he said. A lot of the demand for single-core performance is to get around these fees.
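To see why per-core performance matters under per-core licensing, consider a back-of-the-envelope calculation. Everything in this sketch is an assumption for illustration: the function name, the throughput units, and the fee are hypothetical and do not reflect any vendor's actual pricing.

```python
import math

def license_cost(target_throughput: float,
                 per_core_throughput: float,
                 fee_per_core: float) -> tuple[int, float]:
    """Return (cores needed, total license fee) to reach a target throughput."""
    cores = math.ceil(target_throughput / per_core_throughput)
    return cores, cores * fee_per_core

# Same workload target and the same hypothetical fee per core.
slower_cores = license_cost(target_throughput=1000, per_core_throughput=25, fee_per_core=2000.0)
faster_cores = license_cost(target_throughput=1000, per_core_throughput=40, fee_per_core=2000.0)

print("slower cores:", slower_cores)
print("faster cores:", faster_cores)
```

With these made-up figures, the chip with faster cores needs fewer licensed cores for the same work, so the license bill shrinks even though the workload is unchanged.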

Cores getting some help

After years of AMD lagging behind Intel in both single- and multi-core performance, the two are now roughly equal on both kinds of benchmark, Kennedy said. “I’d say Intel and AMD are very much interchangeable in most applications. But I think that there’s probably that 10%-15% cases where they’re just vastly different,” he said.

For example, in any scenario where memory bandwidth was limited, he would use AMD Epyc processors over an Intel Xeon because Epycs have enormous caches, and going to cache is faster than going to main memory.

“For a general purpose, enterprise workload, I think realistically, you could use either [Intel or AMD]. But I would generally tell people, at this point, it’s probably worth trying one of each, and making a decision based on your workload,” said Kennedy.

The performance of CPUs alone is no longer the deciding factor. Servers are increasingly being augmented by accelerators like GPUs, FPGAs, and AI processors that offload tasks from the CPU in order to speed up the system as a whole.

For example, in anything having to do with VPN termination, Kennedy said he would “100 percent” use an Intel processor with a QuickAssist crypto/compression offload card because it lifts a big load off the CPU.

Andy Patrizio is a freelance journalist based in southern California who has covered the computer industry for 20 years and has built every x86 PC he’s ever owned, laptops not included.